ADELPHI, Md. -- Army researchers created a new collection of video sequences, known as the Robot Unstructured Ground Driving, or RUGD, dataset, designed specifically to help train autonomous vehicles to navigate unstructured environments like forest trails and parks.

With advancements in artificial intelligence and machine learning rapidly changing the modern landscape of warfare, Soldiers will soon require dependable robot teammates to assist them with their missions. One major area of focus for the Army lies in autonomous navigation systems.

Dr. Maggie Wigness, a computer scientist from the U.S. Army Combat Capabilities Development Command's Army Research Laboratory, strives to ease the burden on Soldiers by having robots reduce some of the risk that comes with exploration and data collection.

"The battlefield can be a very complex and dangerous environment," Wigness said. "Having robot teammates in the field with autonomous navigation capabilities would allow the Soldier to delegate tasks to a robot that are dangerous, tedious, or difficult for a human to perform."

However, while multiple datasets exist for training autonomous navigation in structured, urban environments, there is a surprising lack of available data on unstructured environments that feature irregular boundaries, minimal structural markings, and other visual challenges.

This discrepancy in training data could leave robots ill-prepared for tasks such as disaster relief or off-road navigation, since rugged terrain bears little resemblance to the well-paved urban roads that most visual datasets depict. Wigness and her team at the lab's Computational and Information Sciences Directorate created the RUGD dataset to fix this problem.

"We saw the lack of data that represents unstructured environments as a serious gap in the research community," Wigness said. "So, we collected RUGD to fill this gap and help motivate further research in this area."

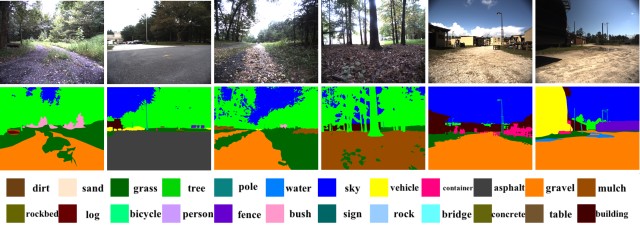

The RUGD dataset consists of footage collected from a small unmanned ground vehicle that traversed various outdoor environments from stream beds to rural compounds. Furthermore, the footage purposefully included elements such as harsh shadows, blurred frames, and obstructed viewpoints to emulate the realistic conditions commonly observed in these environments.

The researchers assembled 18 video sequences with more than 7,000 annotated frames that categorize the visual classes and define the region boundaries between the terrain and the objects within the scene. In total, the dataset contains over 37,000 images that are representative of what autonomous systems may face when navigating unstructured environments.

Wigness and her colleagues then used several baseline and state-of-the-art deep learning architectures to test the RUGD dataset and provide an initial semantic segmentation benchmark.

"The initial experiments indicate there is significant room for improvement in results," Wigness said. "The challenging characteristics of high inter-class similarity, class skew, and unstructured properties make it difficult for even state-of-the-art architectures to learn how to accurately segment these unstructured scenes."

The RUGD dataset and an accompanying research paper titled "A RUGD dataset for Autonomous Navigation and Visual Perception in Unstructured Outdoor Environments" were published in November at the 2019 International Conference on Intelligent Robots and Systems.

ARL scientists have already started using the RUGD dataset in research related to semantic segmentation and object saliency in outdoor environments.

In addition, Wigness publicly released the RUGD dataset in hopes of inspiring others to home in on this particular facet of autonomous navigation.

"Research is often driven by available resources. By making this dataset available to the research community, we are providing a new resource that is representative of challenging real-world unstructured environment properties, and hopefully that will spark more research efforts in this domain," Wigness said. "The use of RUGD has the potential to help train better visual perception systems onboard mobile agents, which in turn will enhance autonomous navigation in unstructured environments."

______________________________

The CCDC Army Research Laboratory is an element of the U.S. Army Combat Capabilities Development Command. As the Army's corporate research laboratory, ARL discovers, innovates and transitions science and technology to ensure dominant strategic land power. Through collaboration across the command's core technical competencies, CCDC leads in the discovery, development and delivery of the technology-based capabilities required to make Soldiers more lethal to win our Nation's wars and come home safely. CCDC is a major subordinate command of the U.S. Army Futures Command.

