ABERDEEN PROVING GROUND, Md. -- Most real-life robots are a long way from their sentient science-fiction counterparts. You could even categorize them as a little bit helpless, prone to falling over. That may be cute for a robotic toy dog, but it is decidedly undesirable for a robot in the field tasked with clearing improvised explosive devices (IEDs).

"We hope that we can prevent that situation from ever being a problem again, where the robot can right itself and return to the vehicle so the Soldier isn't tempted to risk his own life for the sake of a robot," said Dr. Chad Kessens, a researcher at the U.S. Army Combat Capabilities Development Command's Army Research Laboratory.

In 2011, Kessens took a training course with Soldiers in which they learned how to use robots for finding and identifying roadside IEDs.

"Through my interactions with the trainers who had actually used robots in Iraq and Afghanistan, I found out that one of the major problems they have is the robots they're using for this turn over more often than they would like-even once is a problem," Kessens said.

One of the Soldiers that Kessens worked with said that, after 20 minutes of trying and failing to right his robot, which was potentially near an IED, he got out of his vehicle, hustled over to the robot and rescued it because he valued the robot so much. When Kessens heard about this Soldier's experience, he immediately wanted to solve the problem.

"My role," Kessens said, "is to do robotic manipulation research that will positively impact future Army operations."

He and the rest of the lab's Autonomous Systems Division's advanced mobility and manipulation team want to understand how robots can right themselves so that the future Soldier has semi- or fully autonomous robots on the battlefield.

BOT FLIPPING 101

The problem, as Kessens sees it, is that today's robots are unable to reorient themselves after experiencing a disorienting event, like falling into a ditch or being knocked over. The solution, then, is to give robots the ability to self-right. To do that, the robots need to be more aware of the space they are in and how they can move in that space. Kessens has developed a two-part software package, referred to as self-righting software, to give the robots that ability. The first part of the software is for analyzing the robot's structure, while the second part is for planning and executing self-righting maneuvers.

"My job as an Army researcher is to really understand the entire problem," Kessens said. His main goal is to understand the robot's morphology using the analytical part of the self-righting software. Morphology is the study of the forms of things; in this instance, Kessens is studying the robot's shape, where its joints are and how they are oriented in relation to one another, how heavy the limbs are relative to one another, and all the other different parameters that go into the physical makeup of a robot. "How does [the morphology] affect its ability to self-right, and under what circumstances can it not self-right?"

The goal of the software is to encompass as many varieties of robotic systems as possible; therefore, the research has to be relatively generic. However, all research has to start with a set of control parameters: Kessens and the research team assume that the software will be used for robots that have rigid bodies and that carry sensors able to determine what configuration the robot is in and in what direction gravity is acting, Kessens said.

"So we need something, a sensor like an IMU-inertial measurement unit-or we could use just accelerometers or inclinometers. There are different ways to get that information," he said.

An IMU is the sensor in a smartphone that changes the screen from vertical to horizontal, and vice versa, when the phone is turned sideways, as happens when you flip your phone to look at a photo. IMUs "are relatively cheap and pretty ubiquitous, so it's not a leap to assume that a robot would have such a sensor," Kessens said.
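As a rough illustration of how gravity direction can be read from such a sensor, the sketch below estimates how far a robot is tipped from upright using a single accelerometer reading. It is not the team's software. It assumes the robot is at rest, that the accelerometer reports about +9.81 m/s^2 on its body z-axis when the robot is upright, and that the function names and the 90-degree threshold are hypothetical choices made for this example.

```python
import math

def up_vector(ax, ay, az):
    """Normalize an at-rest accelerometer reading into a unit 'up' vector.

    Assumption: at rest, the reading points away from the ground in the
    robot's body frame (sign conventions differ between sensors).
    """
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("accelerometer reading has zero magnitude")
    return (ax / norm, ay / norm, az / norm)

def tilt_from_upright_deg(ax, ay, az):
    """Angle in degrees between the body z-axis and the measured 'up'."""
    _, _, uz = up_vector(ax, ay, az)
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, uz))))

def is_overturned(ax, ay, az, threshold_deg=90.0):
    """Hypothetical rule: tipped past the threshold counts as overturned."""
    return tilt_from_upright_deg(ax, ay, az) > threshold_deg
```

A reading of `(0.0, 0.0, -9.81)` would be classed as overturned under these assumptions, while `(0.0, 0.0, 9.81)` would not.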

The team is also considering the size of the robot in its analysis. Larger robots tend to have more computing power, but robots that could fit in the palm of your hand are limited in how much memory they have and how much processing they can do in real time, he said.

"When I talk about having these two pieces [of software], the analysis piece can happen before the robot ever hits the field, and it will generate maps for the robot that can be stored fairly compactly, in terms of memory ... but [the robot will] still be able to use [the maps] without requiring a great deal of processing," Kessens said.

The idea, he continued, is to use the analytical side of the software to thoroughly assess the robot's morphology beforehand and then capture that information in a compact form to run as a separate piece of software on the robot that the robot would then use to navigate and self-right. The assessment determines all of the orientations a robot could stably sit in for a given joint configuration on a given ground angle, Kessens said.
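To make the idea of a stable resting orientation concrete, here is a simplified two-dimensional sketch, not the team's method. It treats the robot as a rigid body resting on a slope and calls a pose stable when a vertical line dropped from the center of mass lands between the outermost ground contacts. The function name, the parameters and the 2D simplification are all assumptions made for illustration; friction and sliding are ignored.

```python
import math

def is_statically_stable(contact_xs, com_x, com_height, ground_angle_deg):
    """Check tip-over stability of a rigid body on a slope (2D sketch).

    Coordinates are in the slope frame: x runs uphill along the surface,
    and com_height is the center-of-mass distance measured normal to the
    surface. contact_xs lists where the body touches the ground.
    """
    if len(contact_xs) < 2:
        return False  # a single contact point cannot resist tipping here
    if abs(ground_angle_deg) >= 90.0:
        return False  # not a surface the body can rest on
    theta = math.radians(ground_angle_deg)
    # Gravity in the slope frame is (-sin(theta), -cos(theta)), so the
    # vertical line through the center of mass meets the surface at:
    x_hit = com_x - com_height * math.tan(theta)
    return min(contact_xs) <= x_hit <= max(contact_xs)
```

Repeating a check like this over many joint configurations and ground angles would give a table of poses the robot can rest in, which is the kind of information the article describes the analysis step as producing.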

The software figures out how those states connect with one another, forming the map. It is similar to the way a person remembers how to get up from a particular starting position, such as lying on their back. "Once you've done it, you know how. You don't have to think about it much because you can access that knowledge," he said.
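One way to picture such a map is as a graph whose nodes are stable states and whose edges are moves the robot can make between them. The sketch below is an illustration only, assuming a tiny hand-written map with hypothetical state names. It uses breadth-first search to find the shortest sequence of states from a starting pose to upright; the article does not say which path-planning algorithm the team uses.

```python
from collections import deque

# Hypothetical map: each stable state lists the states reachable from it.
RIGHTING_MAP = {
    "upside_down": ["on_side"],
    "on_side": ["upside_down", "propped_on_arms"],
    "propped_on_arms": ["on_side", "upright"],
    "upright": [],
}

def plan_self_right(state_map, start, goal="upright"):
    """Return the shortest list of states from start to goal, or None."""
    if start not in state_map:
        return None
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        current = path[-1]
        if current == goal:
            return path
        for nxt in state_map.get(current, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # the goal cannot be reached from this starting state
```

Because the map is built ahead of time, the lookup on the robot is a cheap graph search, which fits the article's point that small robots have limited memory and processing.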

Of course, not all of the Army's current robotic systems have the same morphology. Not all of them are tracked with a single arm, like iRobot's 510 PackBot or QinetiQ's Talon, and future robots will be even harder to predict.

"Future robotic systems may be significantly more complex-we may have a humanoid robot, and we're going to want that humanoid robot to be able to pick itself up," Kessens said. This futuristic variety presents an interesting challenge for Kessens: How do you tell a variety of robots, both current and future, how to right themselves? Through painstaking research, careful analysis, a little bit of trial and error and a small Lego-like robot.

ROBOT IN MOTION

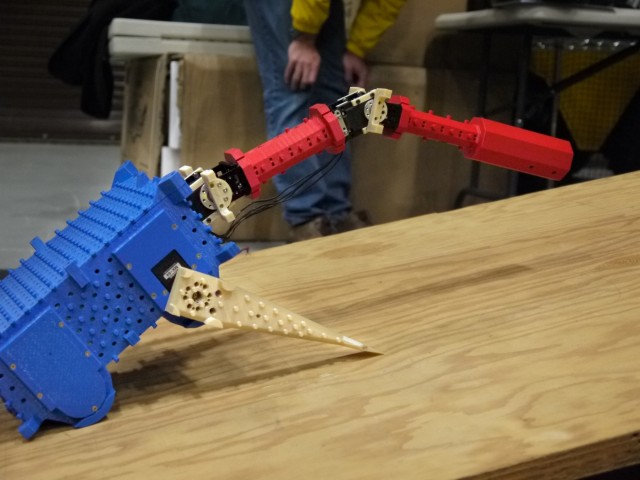

The robot used in the team's research is made of a shoebox-sized blue base, two white, tapered arms on each side, and a red, jointed appendage that carries a counterweight. (All the parts were 3D-printed on the team's own printer.) It can sense its own orientation and send a signal back to the researcher asking permission to self-right. However, this robot is just a research platform, said Geoff Slipher, chief of the ARL's Autonomous Systems Division. He noted that research is still in the early stages, and emphasized that the test robot's capabilities aren't what the team envisions for final systems.

"It's not intended to be anything that the Army would ever intend to field. ... It allows us to ask and answer research questions. So, our product is not a robotic system; our product is knowledge about how to make robotic systems perform better," Slipher said.

In a demonstration, Jim Dotterweich, a researcher working with Kessens, placed the research robot on a wooden ramp on the floor in the center of a room-sized square, steel frame. The frame, or rigging, held motion-capture cameras that in an actual experiment would record the robot's movements. The robot's Lego-like exterior allows researchers to attach reflective globes (motion-capture markers) of varying sizes in hundreds of configurations. The camera system locates the markers in the near-infrared spectrum to find joints and record dynamics of the robot, Dotterweich explained.

Dotterweich conducted the demonstration hunching over a computer on a fold-out table to send the self-right command to the robot. Slowly, the robot pushed itself halfway upright using its arms. And then, in one quick movement, it twisted and jumped to a fully upright position, drawing a gentle cheer from the other researchers.

For their research, Kessens and his team generated a series of maps for different ground angles (different degrees of inclination, like a steep slope) using their software. They start the robot in varying configurations, such as lying upside down or on its side, before running a path-planning algorithm to move the robot through the map, Kessens said. "We generated experimental data and matched it to the model data and showed that, yeah, our maps are doing a pretty good job of saying what states the robot could actually be in," he said. The team also conducted several experiments wherein the robot would be given a random starting configuration before righting itself.

The self-righting software can be adapted to most rigid-body robots. Anything that has arms, legs, wings or flippers (in this case, rigid appendages used specifically to flip a robot over, not to be confused with aquatic flippers) can be used. "Things that are soft or curve continuously, like an elephant trunk, would be a lot more difficult for the software to handle," Kessens said.

In August, ARL partnered with Johns Hopkins University Applied Physics Laboratory to assess the self-righting ability of the U.S. Navy's Advanced Explosive Ordnance Disposal Robotic System, a lightweight, tracked robot similar to the PackBot that can be carried like a backpack. The self-righting software helped to determine the best ways for that system to self-right with the assistance of the Hopkins lab's range adversarial planning tool. The tool, which uses adaptive sampling (a method to efficiently search the space of possible robot configurations), helped the software work faster to generate self-righting maps for the Navy's system, Kessens said. In other words, the planning tool quickly found different ways the robot could be configured, which allowed the self-righting software to plan maneuvers faster.
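The article does not describe how the Hopkins tool works internally, so the following is only a generic sketch of one form of adaptive sampling, not that tool's algorithm. It starts with a coarse grid over a single hypothetical parameter (a joint angle in degrees) and then spends new samples only between neighboring points whose outcomes differ, homing in on the boundary where behavior changes. The `label` callback and all parameter names are assumptions made for this example.

```python
def adaptive_sample(label, lo, hi, coarse=5, rounds=6):
    """Sample label(x) on [lo, hi], refining where the outcome changes.

    label: function mapping a parameter value to a discrete outcome,
           for example True if the pose is stable (hypothetical).
    Returns a dict mapping each sampled value to its outcome.
    """
    step = (hi - lo) / (coarse - 1)
    samples = {lo + i * step: None for i in range(coarse)}
    for x in samples:
        samples[x] = label(x)
    for _ in range(rounds):
        ordered = sorted(samples)
        # Only bisect intervals whose endpoints disagree.
        new_points = [
            (a + b) / 2.0
            for a, b in zip(ordered, ordered[1:])
            if samples[a] != samples[b]
        ]
        if not new_points:
            break  # no boundary left to refine
        for x in new_points:
            samples[x] = label(x)
    return samples
```

With a uniform grid, pinning a boundary down to the same resolution would take far more evaluations; concentrating samples near the change is what makes the search efficient.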

CONCLUSION

Moving robots around, from a simple nudge to an outright backflip, may seem unimportant in the grand scheme of things. Sure, your bomb-sniffing bot being able to right itself is useful and keeps you safe, but what does robotic mobility really mean for the Army?

The software being developed at the laboratory can help the Army create its own robotic systems and help the Army purchase commercial systems, Kessens said. Understanding the self-righting abilities of commercial systems gives the Army a reference point for comparing robots, he explained.

The self-righting software in particular, Slipher said, is relevant to the development of the Next Generation Combat Vehicle; some of these vehicles will be optionally manned or fully autonomous in the future.

"We can envision a circumstance where those robots are out in a situation far away from help, either human or other robotic partner help, where they would roll over or need to right themselves," he said. "And so the basic research that Chad [Kessens] is doing is laying the groundwork for a transition path into larger robotic systems so we understand the physics and how the autonomy and the physical substantiation of the robot, how those two things interact, so that when ... we actually have a design for a vehicle ... then we can understand, OK, here are the requirements that would feed into that in order to build a self-righting capability."

The Army is interested in making robots go fast and making them agile and adaptive, Slipher said, and though technology like the Massachusetts Institute of Technology's cheetah robot is interesting in terms of raw physicality, it's not quite what the Army's after. "What we want is capability, that capability needs to have a purpose, and that purpose ... needs to be able to enhance some sort of mission-effectiveness," Slipher said.

This article is published in the Spring issue of Army AL&T.

_____________________________________

The CCDC Army Research Laboratory (ARL) is an element of the U.S. Army Combat Capabilities Development Command. As the Army's corporate research laboratory, ARL discovers, innovates and transitions science and technology to ensure dominant strategic land power. Through collaboration across the command's core technical competencies, CCDC leads in the discovery, development and delivery of the technology-based capabilities required to make Soldiers more lethal to win our Nation's wars and come home safely. CCDC is a major subordinate command of the U.S. Army Futures Command.
