ABERDEEN PROVING GROUND, Md. -- Researchers at the U.S. Army Research Laboratory and The University of Texas at Austin have developed new techniques for robots or computer programs to learn how to perform tasks by interacting with a human instructor.

The study's findings were presented and published Monday at the Thirty-Second AAAI Conference on Artificial Intelligence, organized by the Association for the Advancement of Artificial Intelligence, in New Orleans, Louisiana.

The purpose of the AAAI conference is to promote research in artificial intelligence and scientific exchange among AI researchers, practitioners, scientists and engineers in affiliated disciplines.

"AAAI is one of the premier conferences in artificial intelligence," said Dr. Garrett Warnell, ARL researcher. "We are excited to be able to present some of our recent work in this area and to solicit valuable feedback from the research community."

ARL and UT researchers considered a specific case where a human provides real-time feedback in the form of critique. They introduced a new algorithm called "Deep TAMER," an extension of an earlier method known as TAMER, or Training an Agent Manually via Evaluative Reinforcement. Deep TAMER uses deep learning, a class of machine learning algorithms loosely inspired by the brain, to give a robot the ability to learn a task quickly from a human trainer while viewing video streams.

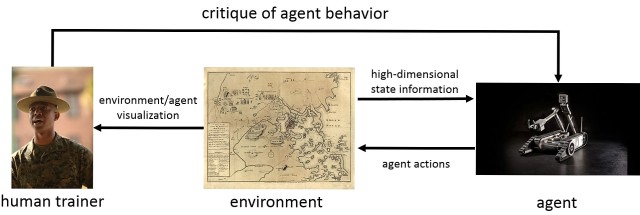

Researchers considered situations where a human teaches an agent how to behave by observing it and providing critique, such as "good job" or "bad job," much as a person might train a dog to do a trick.
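For readers curious about the mechanics, the basic idea behind critique-based training can be illustrated with a small program. The sketch below is a simplified, hypothetical illustration and not the researchers' code: it assumes a handful of discrete states and actions, keeps a simple table estimating how the trainer would rate each action, and always picks the action it expects the trainer to like most. The names `TamerStyleAgent` and `simulated_trainer` are invented for this example, and a scripted function stands in for a real human. Deep TAMER itself learns this estimate with a deep neural network from raw video frames, and the published methods handle details (such as the human's reaction delay) that this sketch omits.

```python
from collections import defaultdict


class TamerStyleAgent:
    """Hypothetical, simplified TAMER-style learner (illustration only).

    Assumes discrete states and actions; Deep TAMER instead uses a deep
    network over images.
    """

    def __init__(self, actions, learning_rate=0.5):
        self.actions = list(actions)
        self.learning_rate = learning_rate
        # H[(state, action)] estimates the trainer's feedback for that choice.
        self.H = defaultdict(float)

    def act(self, state):
        # Choose the action with the highest predicted trainer feedback;
        # ties go to the first action in the list.
        return max(self.actions, key=lambda a: self.H[(state, a)])

    def give_feedback(self, state, action, feedback):
        # feedback is +1.0 for "good job" and -1.0 for "bad job".
        # Move the estimate a fraction of the way toward the feedback received.
        error = feedback - self.H[(state, action)]
        self.H[(state, action)] += self.learning_rate * error


def simulated_trainer(state, action):
    # Hypothetical stand-in for a human: approves of "right", criticizes anything else.
    return 1.0 if action == "right" else -1.0


agent = TamerStyleAgent(actions=["left", "right"])
for _ in range(5):
    state = "start"
    action = agent.act(state)
    agent.give_feedback(state, action, simulated_trainer(state, action))

print(agent.act("start"))  # prints "right"
```

In this toy run, the agent first tries "left," is told "bad job," switches to "right," and keeps choosing it as the approving feedback reinforces that choice. The trainer never demonstrates the task; it only judges the agent's attempts.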

ARL and UT Austin jointly extended earlier work in this field to enable this type of training for robots or computer programs that perceive the world through images, an important first step in designing learning agents that can operate in the real world.

"This research advance has been made possible in large part by our unique collaboration arrangement in which Dr. Warnell, an ARL employee, has been embedded in my lab at UT Austin," said Dr. Peter Stone. "This arrangement has allowed for a much more rapid and deeper exchange of ideas than is typically possible with remote research collaborations."

Many current techniques in artificial intelligence require robots to interact with their environment for extended periods of time to learn how to perform a task optimally. During this process, the agent might perform actions that are not only wrong (a robot running into a wall) but catastrophic (a robot running off the side of a cliff). Researchers believe help from humans will speed up learning for the agents and help them avoid potential pitfalls.

As a first step, the researchers demonstrated Deep TAMER's success by using 15 minutes of human-provided feedback to train an agent to perform better than humans on the Atari game Bowling, a task that has proven difficult even for state-of-the-art methods in artificial intelligence.

Deep-TAMER-trained agents exhibited superhuman performance, besting both their amateur trainers and, on average, an expert human Atari player.

ARL researchers believe the Army of the future will consist of Soldiers and autonomous teammates working side-by-side. While both humans and autonomous agents can be trained in advance, the team will inevitably be asked to perform tasks, such as search and rescue or surveillance, in new environments they have not seen before. In these situations, humans are remarkably good at generalizing their training, but current artificially intelligent agents are not.

"If we want these teams to be successful, we need new ways for humans to be able to quickly teach their autonomous teammates how to behave in new environments," Warnell said. "We want this instruction to be as easy and natural as possible. Deep TAMER, which requires only critique feedback from the human, shows that this type of real-time instruction can be successful in certain, more realistic scenarios."

Over the years, several artificial intelligence researchers have considered a number of ways to use humans to quickly teach an autonomous agent to perform a task. One very popular method is called learning from demonstration, where the agent watches a human perform the task and then tries to imitate the human.

But what if there isn't someone who can demonstrate the task? Any basketball fan can tell you that you don't have to know how to shoot a three-pointer to tell whether someone else is good or bad at it. Allowing autonomous agents to leverage this type of knowledge through critique from a human was the focus of TAMER, developed by collaborator Dr. Peter Stone, a professor at The University of Texas at Austin, along with his former Ph.D. student, Brad Knox.

"TAMER is excellent work, but it implicitly requires an expert programmer to provide the agent with a special kind of advanced knowledge," Warnell explained. "With Deep TAMER, we no longer need the expert programmer."

With Deep TAMER, the researchers also found that the autonomous agents were able to achieve superhuman performance. "They learned so well that they were quickly able to perform better than their human instructors," added ARL researcher Dr. Nicholas Waytowich. "This is an exciting prospect as it breaks the notion that a student is only as good as the teacher."

In the near term, researchers are interested in exploring the applicability of their newest technique in a wider variety of environments: video games other than Atari Bowling, and additional simulation environments that better represent the types of agents and environments found when fielding robots in the real world.

"This effort, supported by a Director's Strategic Initiative award, represents our initial steps in understanding the role of humans in human-AI interaction, in particular understanding how humans can train AI agents to perform tasks," said Dr. Vernon Lawhern, ARL researcher. "In addition, it is also important to try to minimize the amount of human interaction required, as having a human constantly teach AI is infeasible in most situations. Therefore, understanding not only what to convey to an AI agent, but how to convey it, and how to best use that information, will be important to developing flexible human-AI teams of the future."

Ultimately, the researchers want autonomous agents that can quickly and safely learn from their human teammates in a wide variety of styles such as demonstration, natural language instruction and critique.

---

The U.S. Army Research Laboratory is part of the U.S. Army Research, Development and Engineering Command, which has the mission to provide innovative research, development and engineering to produce capabilities that provide decisive overmatch to the Army against the complexities of the current and future operating environments in support of the joint warfighter and the nation. RDECOM is a major subordinate command of the U.S. Army Materiel Command.
