PLAYA VISTA, Calif. (May 5, 2015) -- New research aims to get robots and humans to speak the same language to improve communication in fast-moving and unpredictable situations.

Scientists from the U.S. Army Research Laboratory, or ARL, and the University of Southern California Institute for Creative Technologies, or ICT, are exploring the potential of developing a flexible multi-modal human-robot dialogue that includes natural language, along with text, images and video processing.

"Research and technology are essential for providing the best capabilities to our warfighters," said Dr. Laurel Allender, director of the ARL Human Research and Engineering Directorate. "This is especially so for the immersive and live-training environments we are developing to achieve squad overmatch and to optimize Soldier performance, both mentally and physically."

The collaboration between the Army and ICT addresses the needs of current and future Soldiers by enhancing the effectiveness of the immersive training environment through the use of realistic avatars, virtual humans and intelligent agent technologies, she said.

For ICT, an Army-sponsored university-affiliated research center, the study builds on a body of research in creating virtual humans and related technologies that are focused on expanding the ways Soldiers can interact with computers, optimizing performance in the human dimension, and providing low-overhead, easily accessible and higher-fidelity training.

The mission of the Los Angeles-based institute is to conduct basic and applied research and create advanced immersive experiences that leverage research technologies and the art of entertainment and storytelling to simulate the human experience to benefit learning, education, health, human performance and knowledge.

Toward that goal, much effort focuses on how to build computers - virtual humans and also robots - that can interact with people in meaningful ways.

"Our scientists are leaders in the fields of artificial intelligence, graphics, virtual reality and computer and story-based learning and what is unique about our institute is that they bring their disparate expertise together to find new ways to solve problems," said Randall W. Hill Jr., ICT executive director. "Being managed by ARL also provides great opportunities for collaboration and for aligning our research priorities with Army needs."

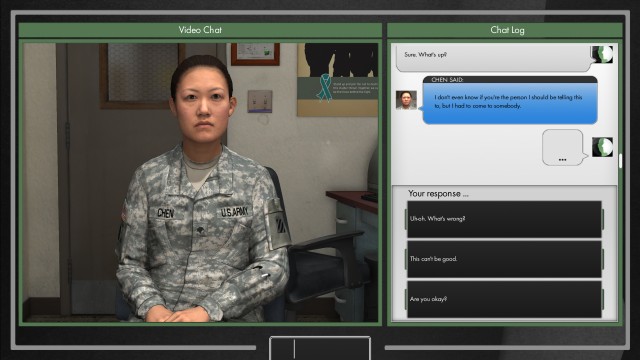

ICT's interactive virtual humans serve as mentors, role players, screeners and more. Some of these autonomous intelligent agents are designed to help develop leadership skills or to help prevent suicide, sexual assault and harassment.

Researchers are advancing techniques and technologies that allow these virtual humans to speak, understand, move, appear and act in ever more believable ways. Their work in these areas has led to virtual human research efforts that inform fields beyond virtual humans, including robotics.

Studies of emotion and rapport are leading to computational systems that communicate more effectively.

Ellie, one of ICT's most advanced virtual humans, can read and react to human emotion by sensing smiles, frowns, gaze shifts and other non-verbal behaviors, as well as analyzing the content of the speech. She can engage in dialogue, deciding when to prompt for more information, or give empathic feedback to a user response. Ellie has interviewed more than 600 people as part of ICT's SimSensei project, a Defense Advanced Research Projects Agency-funded effort to help identify people with depression and PTSD.

It turns out Ellie is good at her job. A recent study suggests that people who spoke to Ellie were willing to reveal more to her than to a real person.

"Our group has been working since 2000 on studying human dialogue, developing computational models of dialogue, building dialogue systems to interact with people and building dialogue components of integrated virtual humans," said David Traum, director of the ICT Natural Language and Dialogue Group. "Our goal is to create computational models of purposeful communication between individuals, and it is gratifying that our basic research has led to a variety of Army applications."

ICT virtual characters and supporting architecture contributed to the Army's Intelligence and Electronic Warfare Tactical Proficiency Trainer. Within the Program Executive Office Simulation, Training, and Instrumentation, known as PEO STRI, a Project Manager Constructive Simulation value engineering proposal estimated that the project saved the Army close to $35 million by incorporating ICT-based natural language capabilities.

Other applications include the virtual Sgt. Star, who answers questions about Army careers for the Army Accessions Command, and Radiobots, dialogue systems that could function as radio operators for constructive simulations. Such systems free up operators from routine communications and data entry.

Current applied projects using ICT natural language research include the Virtual Standard Patient, or VSP, and the Emergent Leader Immersive Training Environment, or ELITE. VSP allows educators to create virtual role players for medical students to practice interview and diagnostic skills.

Natural language understanding, or NLU, and dialogue management technology, both developed at ICT, allow the virtual role players to respond appropriately to student queries. An NLU component also enables Soldiers Army-wide to practice interpersonal communication skills with the virtual staff sergeants in ELITE. The trainer can be downloaded from the Mil.Gaming portal and is in use at the U.S. Military Academy at West Point, New York; ROTC; the Basic Officers' Leader Course; and the Warrior Leader Course.

In their collaboration on developing a possible human-robot dialogue, ICT researchers and their ARL collaborators are exploring more than whether they can enable robots to function better in uncertain conditions. They are also expanding the ways Soldiers will interact with robotic team members, autonomous vehicles, training and simulations.

"By developing tools and technologies for man and machine to converse with and understand one another, ICT researchers, in collaboration with the Army Research Lab and many groups throughout the Army and DOD [Department of Defense], are providing ways to better communicate, be it personal information that can lead to mental health support, or planning information for better situational awareness," said John Hart, ICT program manager at ARL-HRED's Simulation and Training Technology Center. "Their work in human-computer interaction is also paving the way for what will be possible in the future."

Now that is something to talk about.

---

This article appears in the May/June 2015 issue of Army Technology Magazine, which focuses on Future Computing. The magazine is available as an electronic download or as a print publication. It is an authorized, unofficial publication published under Army Regulation 360-1 for all members of the Department of Defense and the general public.

The Army Research Laboratory is part of the U.S. Army Research, Development and Engineering Command, which has the mission to empower the Army and joint warfighter with technology and engineering solutions that ensure decisive capabilities for unified land operations. RDECOM is a major subordinate command of the U.S. Army Materiel Command.
