Information overload! How many people have suffered from the feeling? A 2009 study published by the University of California, San Diego, reported that an American in 2008 consumed, on average, about 34 gigabytes of information a day from more than 20 different sources. And this was before the smartphone became ubiquitous. Such a deluge of information could overload even a powerful computer, let alone the average American.

As information technology has become more available to the military, Soldiers in complex operational situations face significantly more information than in the past, from a broader variety of sources. Just as the average American can be overwhelmed by data, Soldiers receiving information from multiple sources in addition to their own senses can suffer from information overload, decision gridlock and mental exhaustion.

On the battlefield, Soldiers cannot afford to be mentally or physically fatigued. They do not have the leisure to sort through every bit of information or the time to judge the value of the information received. Yet Soldiers must do these things, and they must do them quickly and decisively, constantly adapting to the changing situation. At the same time, information is often clouded by the "fog of war," limiting Soldiers' ability to make reality-based decisions on which their own lives and others' depend.

To have maximum latitude to exercise individual and small-unit initiative and to think and act flexibly, Soldiers must receive as much relevant information as possible, as quickly as possible. Sensor technologies can provide situational awareness by collecting and sorting real-time data and sending fused information to the point of need, but only if they are operationally effective. Augmented reality (AR) and mixed reality (MR) technologies address this challenge: they have shown that they can make sensor systems operationally effective.

DIGITAL, REAL WORLDS UNITE

AR digitally places computer-generated or real-world sensory content on top of a Soldier's view of the physical, real-world environment. In MR, the scanned information about the user's physical environment becomes interactive and can be manipulated digitally. AR and MR function in real time, bringing elements of the digital world into a person's perceived real world and thus enhancing that person's current perception of reality. An example of AR familiar to any National Football League fan is the pair of blue and yellow lines that appear on the television screen to show the line of scrimmage and the first-down line, respectively. The overlay is intuitive and designed not to distract from the game; it requires no training and significantly enhances the fan's experience.

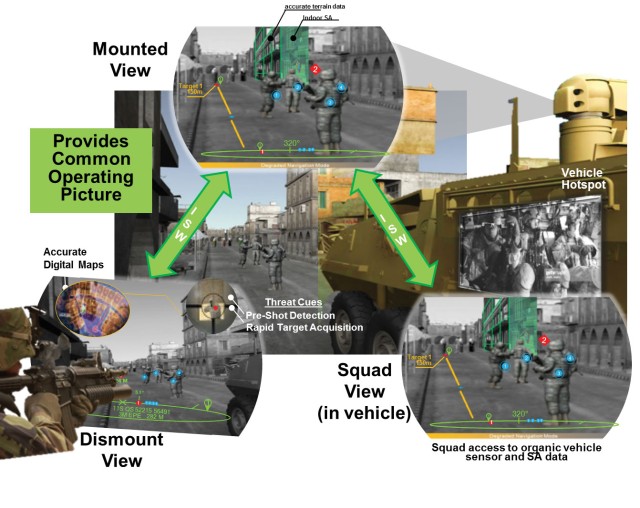

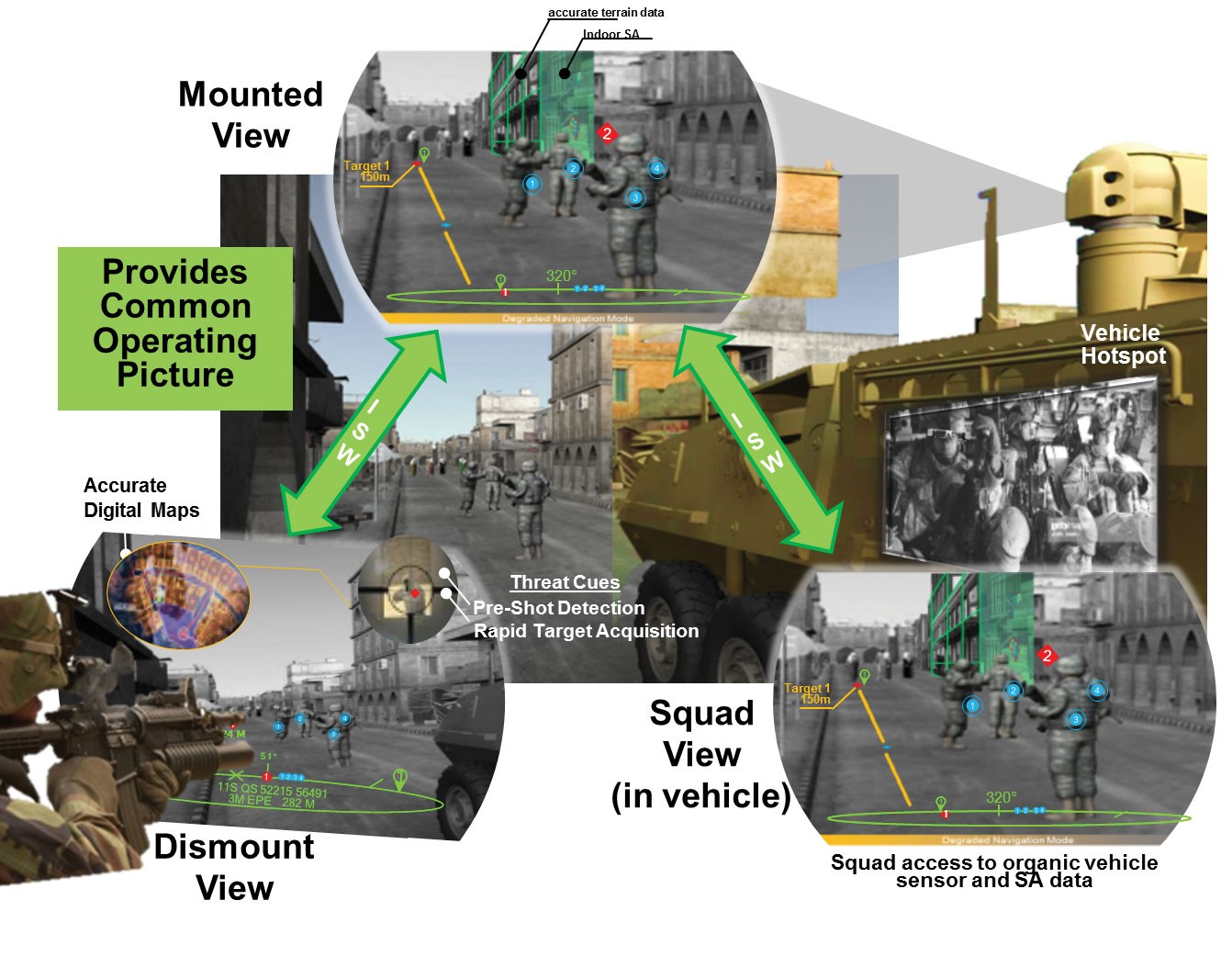

On a Soldier's display, AR can render useful battlefield data in the form of camera imaging and virtual maps, aiding a Soldier's navigation and battlefield perspective. Special indicators can mark people and various objects to warn of potential dangers. Soldier-borne, palm-size reconnaissance copters with sensors and video can be directed and tasked instantaneously on the battlefield at the lowest military echelon. Information can be gathered by multimodal (visual, acoustic, LIDAR or seismic) unattended ground sensors and transmitted to a command center, with AR and MR serving as a networked communication system between military leaders and the individual Soldier.

When used appropriately, AR and MR should not distract Soldiers; instead, they deliver pertinent information immediately, so that a Soldier's decisions are better informed and subsequent actions relevant and timely. AR and MR overlay information and sensor data onto the physical space in a way that is intuitive, serves the point of need and requires minimal training to interpret. Both information overload and the fog of war are thus diminished.

INFORMATION IS POWER

On the future battlefield, increased use of sensors and precision weapons by U.S. adversaries, as well as by the U.S., will threaten the effectiveness of traditional 20th-century methods of engagement. Detection will be more difficult to avoid, and deployed forces will have to be flexible, using multiple capabilities and surviving by reacting faster than the adversary.

As networks of sensors integrate with greater numbers of autonomous systems, the need for faster decision-making will increase dramatically. With autonomous systems becoming more prevalent on the battlefield, adversaries who do not operate under the same human-in-the-loop rules of engagement may be able to deliver lethal responses faster than the U.S. can. Because speed in decision-making at the lowest military echelon is critical, accelerating human decision-making to the fastest rates possible is necessary to maximize the U.S. military's advantage.

AR and MR are the underpinning technologies that will enable the U.S. military to survive in complex environments by decentralizing decision-making from mission command and placing substantial capabilities in Soldiers' hands without overwhelming them with information. Accordingly, the Army has identified AR and MR as innovative solutions in support of a priority of its modernization strategy: increasing Soldier safety and lethality.

MEETING THE CHALLENGES

The challenge for AR and MR is to identify and overcome the technical barriers that limit their operational effectiveness for the Soldier. For example, as Soldiers' operational information needs become more location-specific, real-time georegistration (accurately aligning digital content with its true position in the Soldier's view of the world) will become increasingly important. To meet this near-term technical challenge and several others like it, the Army research and development (R&D) community is investing in the following technology areas:

• Technologies for reliable, ubiquitous, wide-area position tracking that provide accurate self-calibration of head orientation for head-worn sensors.

• Ultralight, ultrabright, ultra-transparent display eyewear with wide field of view.

• Three-dimensional viewers to provide the Soldier with battlefield terrain visualization, incorporating real-time data from unmanned aerial vehicles and the like.
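A core step in georegistration for head-worn displays is converting a target's position relative to the wearer into a point on the display, using the tracked head orientation. The Python sketch that follows illustrates that step under simplifying assumptions made purely for illustration, not drawn from any Army program: the target offset is already known in metres in a local east-north-up frame centred on the wearer's eye, head yaw and pitch come from a tracker (roll is ignored), and the eyewear behaves like an ideal pinhole camera. The function name `project_to_display` and its parameters are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def project_to_display(
    east: float, north: float, up: float,
    yaw_deg: float, pitch_deg: float,
    focal_px: float, center_x: float, center_y: float,
) -> Optional[Tuple[float, float]]:
    """Map a target offset (metres, local east-north-up, relative to the
    wearer's eye) to display pixel coordinates.

    Hypothetical illustration: yaw is clockwise from north, pitch is positive
    looking up, roll is ignored, and the display is an ideal pinhole camera.
    Returns None when the target is behind the wearer.
    """
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    # Head-frame axes expressed in east-north-up coordinates.
    forward = (math.sin(yaw) * math.cos(pitch),
               math.cos(yaw) * math.cos(pitch),
               math.sin(pitch))
    right = (math.cos(yaw), -math.sin(yaw), 0.0)
    head_up = (-math.sin(yaw) * math.sin(pitch),
               -math.cos(yaw) * math.sin(pitch),
               math.cos(pitch))

    target = (east, north, up)
    depth = _dot(target, forward)
    if depth <= 0.0:
        return None  # behind the wearer: nothing to draw
    x_head = _dot(target, right)
    y_head = _dot(target, head_up)
    # Perspective divide; display rows grow downward, hence the minus sign.
    return (center_x + focal_px * x_head / depth,
            center_y - focal_px * y_head / depth)


if __name__ == "__main__":
    # Marker 100 m north and 10 m east of a wearer facing due north.
    print(project_to_display(10.0, 100.0, 0.0, 0.0, 0.0, 800.0, 640.0, 360.0))
    # prints (720.0, 360.0)
    # Marker 100 m due north, but the tracker reports yaw 1 degree off north.
    print(project_to_display(0.0, 100.0, 0.0, 1.0, 0.0, 800.0, 640.0, 360.0))
```

In the second call, the 1° yaw error moves the marker about 14 pixels sideways at the assumed 800-pixel focal length (800 × tan 1° ≈ 14). This suggests why accurate self-calibration of head orientation matters when an overlay must sit on a specific building or road.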

In the mid term, R&D activities are focusing on:

• Manned vehicles equipped with sensors and processing capabilities for autonomous movement, tasked with Soldier protection.

• Teleoperated, semi-autonomous or autonomous robotic assets imbued with intelligence, with limbs that let them keep pace with Soldiers and act as teammates.

• Robotic systems with multiple sensors that respond to environmental factors affecting the mission, or with self-deploying camouflage that stays deployed while the systems execute maneuvers.

• Enhanced reconnaissance through deep-penetration mapping of building layouts, cyber activity and subterranean infrastructure.

In the far term, the R&D community can make progress on key technological challenges once AR and MR prototypes and systems have seen widespread use. Research on Soldier systems will help Soldiers narrow their set of choices, explore options and identify the actions and resources available to facilitate mission success. This research will focus on automation that can track and react to a Soldier's changing situation by tailoring the augmentation the Soldier receives and by coordinating across the unit.

Over the longer term, sensors on Soldiers and vehicles will provide real-time status and updates, allowing performance to be optimized and tailored to each individual. Sensors will also enable adaptive camouflage for the individual Soldier or platform, in addition to reactive self-healing armor. The Army will be able to monitor the health of each Soldier in real time and deploy portable autonomous medical treatment centers using sensor-equipped robots to treat injuries. Air-dispersible microsensors and microdrones with image-processing capabilities will further enhance detection.

Beyond these capabilities, AR and MR will revolutionize training. Used as a tactical trainer, AR and MR will empower Soldiers to train as they fight. For example, Soldiers soon will be able to use real-time sensor data from unmanned aerial vehicles to visualize battlefield terrain, providing geographic awareness of roads, buildings and other structures before conducting their missions. They will be able to rehearse and analyze courses of action before execution to improve situational awareness. AR and MR are increasingly valuable aids to tactical training in preparation for combat in complex and congested environments.

CONCLUSION

Currently, several Army laboratories and centers are conducting cutting-edge research in AR and MR with significant success. Work at the U.S. Army Research, Development and Engineering Command and the U.S. Army Engineer Research and Development Center (ERDC) is having a significant impact by enabling Soldiers on the ground to benefit from data supplied by locally networked sensors.

AR and MR are the critical elements required for integrated sensor systems to become truly operational and support Soldiers' needs in complex environments. It is imperative that both technologies mature sufficiently to enable Soldiers to digest real-time sensor information for decision-making. Solving the challenge of how and where to use augmented reality and mixed reality will enable the military to get full value from its investments in complex sensor systems.

For more information or to contact the authors, go to www.cerdec.army.mil.

DR. RICHARD NABORS is associate director for strategic planning and deputy director of the Operations Division at the U.S. Army CERDEC Night Vision and Electronic Sensors Directorate (NVESD) at Fort Belvoir, Virginia. He holds a doctor of management in organizational leadership from the University of Phoenix, an M.S. in management from the Florida Institute of Technology and a B.A. in history from Old Dominion University. He is Level I certified in program management.

DR. ROBERT E. DAVIS is the chief scientist and senior scientific technical manager for geospatial research and engineering at ERDC, headquartered in Vicksburg, Mississippi, with laboratories in New Hampshire, Virginia and Illinois. He holds a Ph.D. in geography, an M.A. in geography and a B.A. in geology and geography, all from the University of California, Santa Barbara.

DR. MICHAEL GROVE is principal deputy for technology and countermine at NVESD. He holds a doctor of science in electrical engineering and an M.S. in electrical engineering from the University of Florida, and a B.S. in general engineering from the United States Military Academy at West Point. He is Level III certified in engineering and in program management.

This article is published in the January–March 2018 Army AL&T magazine.

Related Links:

U.S. Army Acquisition Support Center

How Much Information? 2009 Report on American Consumers

U.S. Army Engineer Research and Development Center

U.S. Army's Communications-Electronics Research, Development and Engineering Center
