"Our focus areas for the [fiscal year 2013] budget demonstrate our concerted effort to establish clear priorities that give the Nation a ready and capable Army while being good stewards of all our resources….With a leaner Army, we have to prioritize and also remain capable of meeting a wide range of security requirements."

--Secretary of the Army John M. McHugh and Chief of Staff of the Army Gen. Raymond T. Odierno

"The Army must continually adapt to changing conditions and evolving threats to our security. An essential part of that adaptation is the development of new ideas to address future challenges."

--Chairman of the Joint Chiefs of Staff Gen. Martin E. Dempsey

The Army's unit status report (USR) personnel readiness metrics are assessed using the criteria prescribed in Army Regulation (AR) 220-1, Army Unit Status Reporting and Force Registration--Consolidated Policies. These metrics directly support the calculation and determination of resource measurements, capability assessments, and overall assessments that are required to be reported.

The current method of determining these metrics results in a product that does not adequately assess the Army's ability to maintain strategic land power capabilities. Specifically, the available duty military occupational specialty qualified (DMOSQ) metric does not measure capability; it measures an administrative process. This miscalculation has the following unintended negative consequences:

• The Army unnecessarily reports lower readiness assessment (RA) levels and lower yes, qualified yes, or no (Y/Q/N) assessment ratings to the Joint Chiefs of Staff within the Chairman's Readiness System.

• Measured units report lower C-levels to higher headquarters relative to their ability to accomplish core functions and designed capabilities. Measured units are Army units, organizations, and installations that are required by AR 220-1 to report their resource measurements and capability assessments. The C-level readiness assessment reflects the unit's ability to accomplish core functions, provide designated capabilities, and execute the standardized mission-essential tasks.

• The Army factors in inaccurate capability variables during the planning phase of its planning, programming, budgeting, and execution process.

• The Army considers invalid benchmarks when making decisions to adjust strategic doctrine, organization, training, materiel, leadership and education, personnel, and facilities (DOTMLPF) levels to increase future readiness.

As we move toward a leaner Army and tighter budget constraints, we must adjust how we assess personnel readiness so that the Army is appropriately reporting its capabilities and making decisions with useful variables at the strategic, operational, and tactical levels.

For the purpose of this article, the term Soldier refers to enlisted personnel, warrant officers, and officers. MOS refers to the military occupational specialties and branches within the enlisted and officer corps, and grade refers to their ranks.

MEASURING THE P-LEVEL

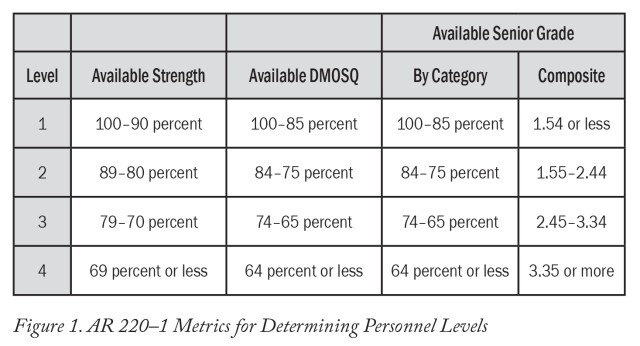

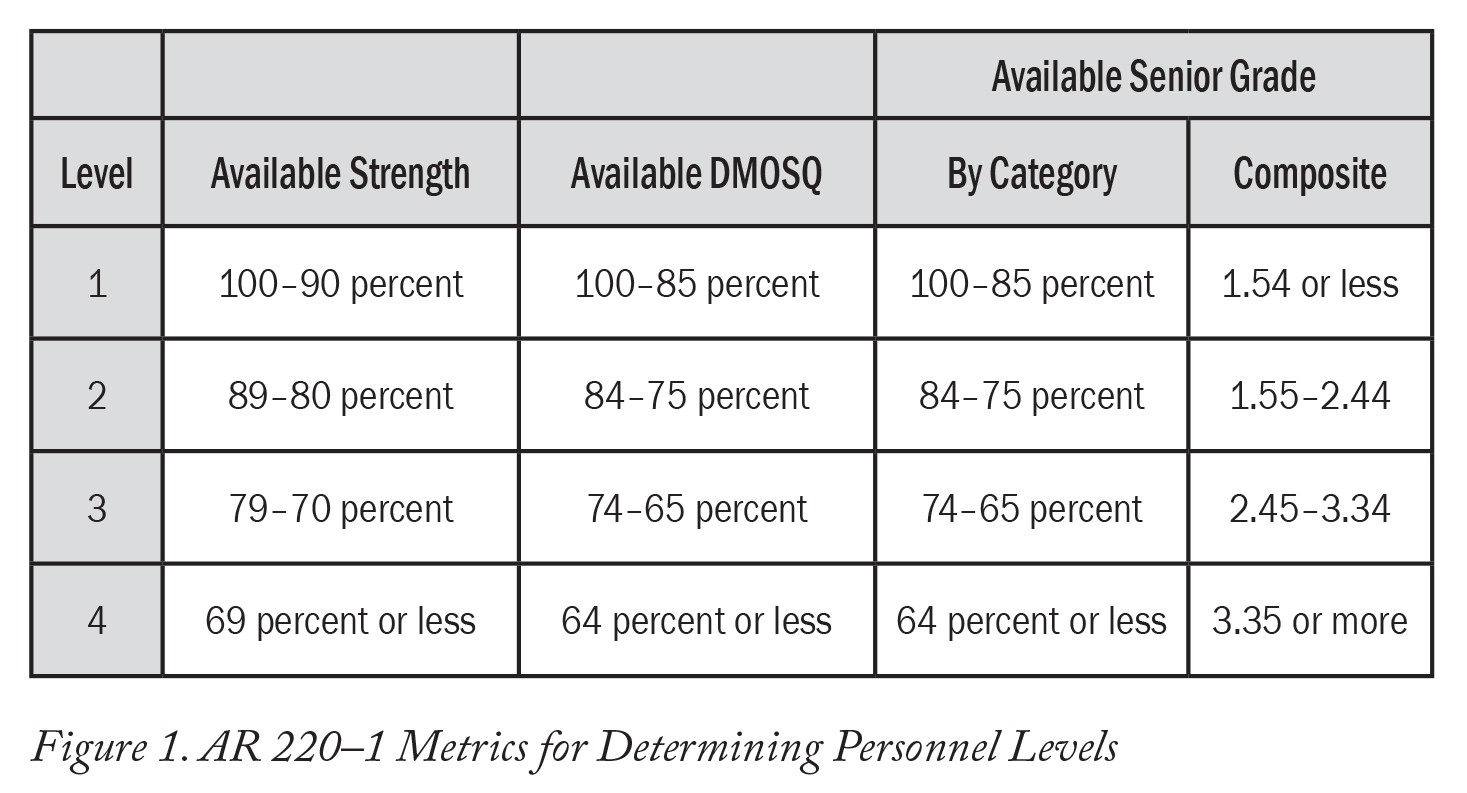

The personnel level (P-level) is one of four areas a unit measures that factor into its overall C-level, which is the overall assessment of core functions and capabilities. The Army measures its P-level by comparing available strength, available DMOSQ, and available senior-grade composite-level metrics as defined in AR 220-1. These are determined as follows:

• Available strength is determined by dividing the available personnel by the required personnel.

• Available DMOSQ is calculated by dividing the number of currently available assigned and attached Soldiers considered DMOSQ by the number of required personnel.

• Available senior-grade composite level is determined by averaging the applicable category levels and then applying the results in a reference table to identify the composite level.

The unit reports its P-level using the metric with the lowest level as noted in figure 1. For example, if a unit's available strength is 91 percent (P-1) and its available DMOSQ is 73 percent (P-3), the unit must report P-3 in its monthly USR.
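The lowest-metric rule can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, and the threshold bands are assumptions chosen to agree with the example above (91 percent available strength is P-1; 73 percent available DMOSQ is P-3). The authoritative bands are those in AR 220-1 and figure 1. The senior-grade composite level is passed in directly because it comes from a reference table that is not reproduced here.

```python
# Assumed lower bounds (percent) for P-1, P-2, and P-3; anything lower is P-4.
# Illustrative values only; consult AR 220-1 for the authoritative bands.
STRENGTH_BANDS = (90, 80, 70)
DMOSQ_BANDS = (85, 75, 65)


def level_from_percent(percent, bands):
    """Return 1-4 (P-1 to P-4) for a percentage, given descending lower bounds."""
    for level, lower_bound in enumerate(bands, start=1):
        if percent >= lower_bound:
            return level
    return len(bands) + 1


def p_level(available, available_dmosq, required, senior_grade_level):
    """Report the worst (numerically highest) of the three personnel metrics."""
    strength_pct = 100 * available / required
    dmosq_pct = 100 * available_dmosq / required
    return max(
        level_from_percent(strength_pct, STRENGTH_BANDS),
        level_from_percent(dmosq_pct, DMOSQ_BANDS),
        senior_grade_level,
    )


# The example from the text: 91 percent strength, 73 percent DMOSQ.
level = p_level(available=91, available_dmosq=73, required=100, senior_grade_level=1)
print(f"P-{level}")
```

Under these assumed bands the unit reports P-3, even though its available strength alone would rate P-1.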

A unit's lowest recorded level in any of its individually measured resource areas (personnel, equipment and supplies on hand, equipment condition, and training) will be its C-level. Therefore, a low P-level derived from an invalid DMOSQ metric will drive down a C-level.
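The same worst-case rule applies one step up. A minimal sketch, assuming each resource area is already expressed as a level from 1 (best) to 4 (worst); the function name is hypothetical, and any commander's subjective adjustment is deliberately left out:

```python
def c_level(personnel, equipment_on_hand, equipment_condition, training):
    """Return the worst (numerically highest) of the four measured area levels."""
    return max(personnel, equipment_on_hand, equipment_condition, training)


# A unit rated level 1 in every area except a P-3 personnel rating reports C-3.
overall = c_level(personnel=3, equipment_on_hand=1, equipment_condition=1, training=1)
print(f"C-{overall}")
```

Because the rule takes the worst area, a single distorted input is enough to lower the overall rating.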

The Army established the available DMOSQ metric without a Title 10 or regulatory mandate. Consequently, the Army routinely reports its personnel readiness lower than it should: most of the units reporting below P-1 do so because their available DMOSQ is in the P-2 or lower range.

Classifying units as P-2 and lower because DMOSQ Soldiers are unavailable hides units that need help with available strength and senior-grade deficiencies. The Army as a whole loses countless man-hours engaging P-2 and lower concerns that the unavailability of DMOSQ Soldiers unnecessarily creates.

I will provide evidence supporting this assertion, but first it is important to understand contextually the Army's requirement to report its capability assessment and how measuring the wrong metric can have negative strategic implications.

READINESS REPORTING

Title 10 directs the secretary of defense to "establish a comprehensive readiness reporting system for the Department of Defense" that will "measure [personnel readiness] in an objective, accurate, and timely manner." More specifically, on a monthly basis the Department of Defense must measure "the capability of units (both as elements of their respective armed force and as elements of joint forces)…, critical warfighting deficiencies in unit capability," and "the level of current risk based upon the readiness reporting system relative to the capability of forces to carry out their wartime missions."

The secretary of defense executes the Title 10 mandate through Department of Defense Directive (DODD) 7730.65, Department of Defense Readiness Reporting System (DRRS), and DODD 7730.66, Guidance for the Defense Readiness Reporting System. DODD 7730.65 "establishes a capabilities-based, adaptive, near real-time readiness reporting system," and DODD 7730.66 instructs service secretaries to "develop and monitor task and resource metrics to measure readiness and accomplish core and assigned missions" monthly.

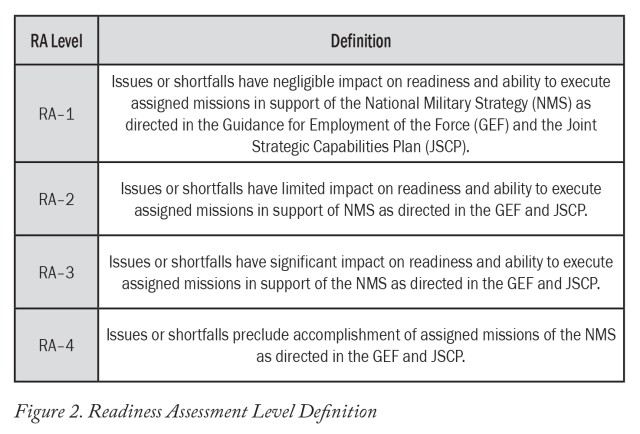

The chairman of the Joint Chiefs of Staff (CJCS) established the Chairman's Readiness System to accomplish the secretary of defense's mandate to "measure the preparedness of our military to achieve objectives as outlined in the National Military Strategy." Units use the Global Status of Resources and Training System (GSORTS) and DRRS to capture data and report readiness. The CJCS uses the quarterly Joint Force Readiness Review as the vehicle to apply the services' RAs from GSORTS and DRRS to an overall RA, relative to the ability of the services to support the National Military Strategy. (See figure 2.)

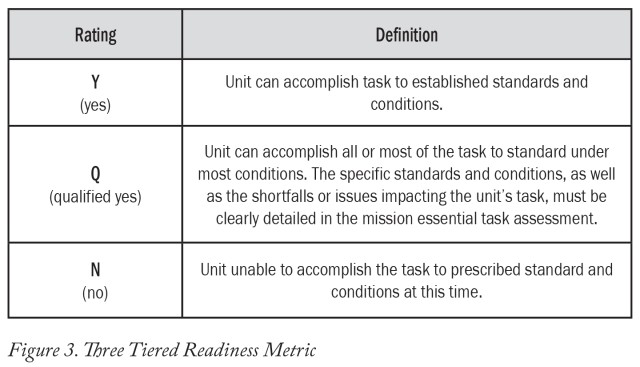

The Joint Force Readiness Review further requires each service to assess its ability to accomplish a task to standard under conditions specified in its assigned joint mission essential tasks and assigned mission essential tasks using a Y/Q/N rating. (See figure 3.)

The purpose of addressing GSORTS, DRRS, and the Joint Force Readiness Review is to highlight the complexities involved in assessing and reporting personnel readiness at the strategic level and the importance of using relevant metrics at the input level. As depicted in figure 1, personnel status measurements cascade into capability assessments at the strategic level that have national command authority repercussions.

Figure 1 can also help visualize how a P-level acquired from irrelevant metrics will affect the C-level, ultimately affecting how services derive RA and Y/Q/N capability levels.

CJCS Instruction (CJCSI) 3401.02B, Force Readiness Reporting, is the first document to establish P-level metrics. It mandates two joint metrics and offers one that is optional. The Army uses all three: total available strength, critical personnel, and critical grade fill.

TOTAL AVAILABLE STRENGTH. This required metric is the total available personnel divided by required personnel.

CRITICAL PERSONNEL. This required metric consists of the designated critical MOS available strength divided by the critical MOS structured strength.

CRITICAL GRADE FILL. This optional metric, if service directed, calculates a critical grade fill P-level. The Army directed available senior-grade strength to calculate this metric.

To satisfy the critical personnel requirement, the Army directed the use of available DMOSQ. This is where the Army misses the mark by measuring an administrative process instead of a capability.

ARMY PERSONNEL READINESS REPORTING

Although the CJCSI directs the services to measure critical personnel, it does not require the use of available DMOSQ to do so. The Army, in choosing available DMOSQ and the method to measure the metric, not only increases the requirement but also uses a flawed method to execute it. As a result, the Army does not measure its personnel capability; it measures its ability to execute a process.

The Army's current method does not determine if the unit has all of the Soldiers it is authorized by MOS and grade; it measures a process in which a battalion human resources specialist is supposed to conduct a transaction in the Electronic Military Personnel Office (eMILPO) to align, or "slot," a Soldier's name against the correct paragraph and line number in the unit's modified table of organization and equipment (MTOE).

In many cases the units have every MOS and grade required by their authorization document, but they have failed to properly code them in an Army personnel software program. As a result, units have the personnel capabilities required, but the current reporting standard mandates a misleading assessment to senior Army military and civilian leaders.

DMOSQ DISADVANTAGES

Measuring a unit's ability or inability to slot a Soldier correctly in eMILPO does not measure capabilities. Moreover, the available DMOSQ metric measures personnel available within the category, exacerbating the problem by essentially counting unavailable personnel twice: once in the available strength metric and again in the available DMOSQ metric.
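The double counting can be seen with a small numeric illustration. The unit below is hypothetical: every authorization is filled by a qualified, correctly slotted Soldier, and 12 Soldiers are unavailable. The function names are assumptions, and each metric follows the definition given earlier (a count divided by required personnel).

```python
def available_strength(available, required):
    """Available personnel divided by required personnel."""
    return available / required


def available_dmosq(available_qualified, required):
    """Available DMOSQ Soldiers divided by required personnel."""
    return available_qualified / required


required = 100
assigned = 100       # every authorization filled by a qualified, slotted Soldier
unavailable = 12     # hypothetical number of unavailable Soldiers
available = assigned - unavailable

strength = available_strength(available, required)
dmosq = available_dmosq(available, required)  # all available Soldiers are DMOSQ

print(f"available strength: {strength:.0%}")
print(f"available DMOSQ:    {dmosq:.0%}")
```

The same 12 unavailable Soldiers lower both percentages, although the unit has no qualification shortfall at all.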

Therefore, we must question the Army's use of available DMOSQ as one of the metrics to determine P-levels, and we must determine the advantages and disadvantages of this process. My research neither identified an advantage for using available DMOSQ nor determined the original rationale behind the decision to use it to execute the CJCSI requirement to measure critical personnel.

In fact, a senior Army officer with 31 years of service stated that the Army has been using available DMOSQ as a metric since he was a second lieutenant and he does not know why. His conclusion was that it fell into the unfortunate category of "that's how we have always done it."

Using available DMOSQ as a metric has several disadvantages, the first being that units report lower P-levels and thus lower C-levels relative to their ability to accomplish core functions and designed capabilities. Consequently, senior Army leaders make strategic decisions based on distorted data. This leads to the Army reporting lower RA levels and lower Y/Q/N assessments within the Chairman's Readiness System.

Other disadvantages include the Army using inaccurate capability variables during the planning phase of the planning, programming, budgeting, and execution process; considering invalid benchmarks when making decisions about DOTMLPF changes; masking units that need help with available strength and available senior-grade deficiencies; and losing countless man-hours while engaging P-2 and lower concerns.

Because the C-level is derived from the lowest level recorded in any of the unit's individually measured resource areas, and because the current available DMOSQ method measures an administrative process, the Army must alter the metrics and methodologies it uses to assess a unit's C-level so that they portray actual capability, not process.

An example of distorted data is a Human Resources Command (HRC) G-3 analysis of the October 2012 USR. Of the 127 rotational forces, 26 (20 percent) reported a P-1 status, and 101 units (80 percent) reported P-2 or lower. Of those 101 units, 31 reported P-2 or lower because their available DMOSQ percentage was below 85 percent. If the units had used this article's recommended metric vice the available DMOSQ, 57 units (45 percent) would have been P-1, more than doubling the number of units with P-1 levels.

The HRC commander noted that virtually every unit affected by this calculation had their MOSs and grades assigned to the unit, but the units had not slotted the Soldiers correctly in eMILPO. Without the available DMOSQ metric, the Army would have a more useful assessment of its capabilities to perform core functions and assigned missions and would be able to better focus resources to aid the 70 rotational force units that did not reach P-1 because of unavailable strength or unavailable senior-grade personnel.

The December 2012 USR analysis continues this trend. An HRC Enlisted Personnel Management Directorate analysis indicates that 48 of the 127 units (38 percent) reported P-1. Thirty-eight of the 79 units reporting P-2 or lower did so because of unavailable DMOSQ. Of those 38 units, 28 would have been P-1 if measured by the proposed assigned and authorized metric. This would have increased P-1 units to 76, or 60 percent, an increase of 22 percentage points.

DISSATISFACTION WITH DMOSQ

Removing the available DMOSQ metric would provide more relevant personnel capability assessments and would allow the Army to focus resources to assist the 51 units that did not make P-1 because of available strength and available senior-grade composite levels.
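The counts quoted from the October and December 2012 USR analyses can be cross-checked with simple arithmetic. The sketch below uses only figures stated in the text; the function and key names are hypothetical.

```python
def usr_summary(total_units, reported_p1, would_gain_p1):
    """Summarize reported and projected P-1 counts for one monthly USR."""
    projected_p1 = reported_p1 + would_gain_p1
    reported_pct = round(100 * reported_p1 / total_units)
    projected_pct = round(100 * projected_p1 / total_units)
    return {
        "reported_p1_pct": reported_pct,
        "projected_p1": projected_p1,
        "projected_p1_pct": projected_pct,
        "point_gain": projected_pct - reported_pct,
        "still_below_p1": total_units - projected_p1,
    }


# October 2012: 26 of 127 units at P-1; 31 more limited only by available DMOSQ.
october = usr_summary(total_units=127, reported_p1=26, would_gain_p1=31)
# December 2012: 48 of 127 units at P-1; 28 more would reach P-1.
december = usr_summary(total_units=127, reported_p1=48, would_gain_p1=28)

print(october)
print(december)
```

The results match the article: 57 units (45 percent) and 70 units still below P-1 for October; 76 units (60 percent) and 51 units still below P-1 for December. The December change from 38 to 60 percent is a gain of 22 percentage points.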

In the Army G-1 information paper, "Improving the Duty Occupational Specialty Qualification (DMOSQ) metric within the Unit Status Report (USR)," Chief Warrant Officer 5 Andre Davis, Lt. Col. Tom Burke, and Lt. Col. Bill Haas recommend changing available DMOSQ to a more relevant metric. They contend that the available DMOSQ metric is "the most restrictive personnel readiness indicator of the three P-level metrics… and the available DMOSQ metric provides an inaccurate readiness assessment."

I agree that the available DMOSQ provides an inaccurate assessment, but this article's appeal for change is not because the metric is restrictive. Restrictive is acceptable if it measures a capability and is the right metric to meet the P-level requirement defined in the Force Readiness Reporting CJCSI.

The Army G-1 information paper further supports this article's assertion by stating that the available DMOSQ component of the P-level metric is the cause of most units' low P-levels.

GENERAL OFFICER STEERING COMMITTEE REVIEW

A December 2012 strategic readiness general officer steering committee (GOSC) discussed removing the available DMOSQ as a USR metric, stating "rules for calculating the MOSQ [available DMOSQ] metric in Army units promotes artificially lower P-levels, hence creating conditions that may overstate [the] magnitude of degraded readiness." The GOSC identified personnel incorrectly slotted in eMILPO and the DMOS box not checked in the Net-Centric Unit Status Report (NetUSR) application as two reasons units do not report P-1.

During the GOSC, key data highlighting the negative impact of using available DMOSQ was found in a Forces Command (FORSCOM) review of P-levels that 55 FORSCOM brigade combat teams and combat aviation brigades reported on their USRs during the six months leading up to their deployments from 2008 to 2012. The review aggregated the 55 units' USRs and discovered that 70 percent of the brigades reported below P-1 because of available DMOSQ, yet every unit was P-1 on its first deployed USR. This further confirms that the available DMOSQ metric does not measure the capability of a unit to execute its core functions and assigned missions. The units had the MOSs and grades required (the capability) to accomplish their assigned missions. However, they were constrained by the AR 220-1 requirement to use available DMOSQ as a metric to assess and report personnel readiness in the months leading up to their deployment.

Having been a division G-1 for 36 months, I know that the P-levels these units reported before their deployment are common and invariably create angst and scrutiny at every level, resulting in untold man-hours of staff responding to unnecessary questions. The extra work generated by the inaccurate P-levels that available DMOSQ produces keeps commanders and staffs at every level from spending more time preparing their units to deploy.

RECOMMENDATION

The Army should replace the available DMOSQ metric with an assigned and authorized metric with the following instructions:

• The assigned and authorized metric is defined as the total assigned strength divided by the unit's MTOE authorizations, to include the explicit mitigation strategies defined in the Headquarters, Department of the Army (HQDA), Fiscal Year 2013 to 2015 (FY13-15) Active Component Manning Guidance (ACMG).

• Slot lower enlisted personnel, noncommissioned officers, warrant officers, and officers correctly in eMILPO.

• Use officer and enlisted substitutions within the same grade, one grade lower, or two grades higher to fill shortages.

• Count promotable populations as the next higher grade.

• Maximize grade and MOS substitutions to fill critical needs.

• Execute this metric precisely; it should measure the number of assigned MOSs and grades against the MOS and grade authorizations so that an excess in one MOS or grade cannot inflate the percentage and thus the P-level.

Following these instructions, a rating of 94 percent means that 94 percent of the authorizations on that unit's MTOE are filled by exact MOS and grade or in accordance with the HQDA FY13-15 ACMG substitution rules. That is a true measurement of capability.
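One way to picture the proposed calculation is the sketch below. It is not the ACMG's or NetUSR's actual algorithm: the class and function names are hypothetical, the MOS codes are sample data, MOS-for-MOS substitutions are not modeled, and the grade rule is one assumed reading of "same grade, one grade lower, or two grades higher." Each authorization line is capped, so an excess in one MOS or grade cannot raise the percentage.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Soldier:
    mos: str
    grade: int                 # numeric grade within one corps, e.g. 5 for E-5
    promotable: bool = False

    @property
    def effective_grade(self):
        # Promotable Soldiers count as the next higher grade.
        return self.grade + 1 if self.promotable else self.grade


# Assumed reading of the substitution rule: Soldier grade minus authorized
# grade may be 0 (exact), -1 (one lower), or +1/+2 (up to two higher).
GRADE_OFFSETS = (0, -1, 1, 2)


def assigned_and_authorized(authorizations, soldiers):
    """Return the fraction of authorizations filled, capped line by line."""
    open_slots = Counter(authorizations)
    pool = Counter((s.mos, s.effective_grade) for s in soldiers)
    filled = 0
    for offset in GRADE_OFFSETS:          # exact matches first, then substitutes
        for (mos, auth_grade), need in list(open_slots.items()):
            take = min(need, pool[(mos, auth_grade + offset)])
            if take > 0:
                open_slots[(mos, auth_grade)] -= take
                pool[(mos, auth_grade + offset)] -= take
                filled += take
    total = sum(authorizations.values())
    return filled / total if total else 0.0


# Sample data: three Soldiers against two 42A slots, one promotable 92Y, no 11B.
authorizations = {("42A", 5): 2, ("92Y", 4): 1, ("11B", 6): 1}
soldiers = [
    Soldier("42A", 5),
    Soldier("42A", 5),
    Soldier("42A", 5),                    # excess; must not raise the percentage
    Soldier("92Y", 3, promotable=True),   # counts as grade 4
]

naive = len(soldiers) / sum(authorizations.values())
capped = assigned_and_authorized(authorizations, soldiers)
print(f"assigned / authorized, uncapped: {naive:.0%}")
print(f"assigned and authorized, capped: {capped:.0%}")
```

In this sample the uncapped ratio is 100 percent even though the 11B authorization is empty, while the capped calculation reports 75 percent. The greedy matching order is a simplification; a production implementation would need the full ACMG substitution rules.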

COUNTERARGUMENT

I suspect the primary argument against replacing available DMOSQ with assigned and authorized is that it does not give Reserve component (RC) units the ability to accurately report Soldiers who have not completed the training required to be MOSQ. The Active component (AC) does not have this challenge since AC Soldiers are reported in a training, transit, hold, and student status until they are fully trained and report to the unit; only then do units report them on their USRs. However, RC units can have Soldiers assigned to them who have not completed their training and are not DMOSQ.

NetUSR provides the solution for RC units. Currently RC data is imported into NetUSR, and RC units can indicate which of their Soldiers have not completed the required MOS qualification training.

The NetUSR software functionality allows the unit to adjust the DMOSQ data for pay grades E-3 and below to accurately report their status by simply clearing the DMOS check box. This is needed when an RC Soldier goes to basic training and returns home before attending advanced individual training or when he transfers to a new MOS and needs additional training to become DMOSQ.

This process will not change. Using the assigned and authorized metric, the RC will continue to import its data into NetUSR and uncheck the DMOS box for those E-3s and below who are not DMOSQ. This will remove the Soldier from the authorization line and result in the same capability measurement the AC uses. Both AC and RC will measure their true personnel capability while allowing the RC to know which Soldiers are not DMOSQ and need training.
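The effect of clearing the DMOS box can be sketched as a simple filter applied before the metric is calculated. The record layout and field names below are assumptions for illustration and do not reflect NetUSR's actual data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RosterEntry:
    name: str
    pay_grade: int       # enlisted pay grade number, e.g. 3 for E-3
    dmos_checked: bool   # assumed field: state of the DMOS check box


def countable(roster):
    """Drop E-3 and below whose DMOS box is unchecked; keep everyone else."""
    return [s for s in roster if s.dmos_checked or s.pay_grade > 3]


roster = [
    RosterEntry("Soldier A", pay_grade=2, dmos_checked=False),  # awaiting training
    RosterEntry("Soldier B", pay_grade=3, dmos_checked=True),
    RosterEntry("Soldier C", pay_grade=5, dmos_checked=True),
]

counted = countable(roster)
print([s.name for s in counted])
```

Only the Soldiers who remain after the filter would be matched against authorizations, so the RC unit's percentage would reflect trained personnel in the same way the AC's does.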

Some may believe that available DMOSQ is the correct metric and method to measure personnel readiness. This article shows that the method fails, both from a logical review of what it measures and from empirical data.

Measuring a process does not measure capability. Every Soldier is a capability, and the unit's MTOE identifies by paragraph and line number the exact capabilities the unit requires. The best way to measure that unit's capability is to measure if it has every Soldier assigned that is authorized, hence the proposed assigned and authorized metric.

Others may assert that the commander's ability to subjectively upgrade the C-level or A-level is sufficient to counter low P-levels that available DMOSQ creates. That assertion is flawed. The available DMOSQ metric does not measure capability from the start. Measuring a process creates an invalid starting point from which a commander can consider a subjective upgrade. This renders any upgrade null and void.

Some may agree with the assigned and authorized metric but do not want to use the FY13-15 ACMG mitigation strategies as part of the metric. The chief of staff of the Army approved the ACMG as the rules of engagement for manning. This ensures consistency in how the Army distributes Soldiers to units, which is required when anyone defends a method.

One might argue that replacing available DMOSQ will take the focus off of the need for unit personnel officers to properly slot Soldiers in eMILPO, but that is misguided. Measuring the Army's capability is serious business. Senior civilian leaders make decisions with the readiness information the Army reports. Because available DMOSQ does not measure our Army's personnel readiness correctly, it is imperative that the Army adopt the assigned and authorized metric in order to accurately measure capability.

Commanders can use other venues, such as the FORSCOM Personnel Readiness Review, to measure a unit's ability to properly slot a Soldier in eMILPO, and unit S-1s can run this report as frequently as their commanders require. The USR and the strategic decisions that it drives are not the places to measure an administrative function.

Lastly, some may assert that changing the metric simply makes the Army's P-1 "scores" look better. That claim holds no merit. The Chairman's Readiness System is about assessing and reporting capabilities. Simply put, the available DMOSQ metric does not measure capability. The proposed assigned and authorized metric measures capability. It has nothing to do with higher scores or looking better. It is about the Army executing the Title 10 mandate to "measure in an objective, accurate, and timely manner the capability of the armed forces."

COST OF NOT ADOPTING PROPOSAL

The cost of not adopting this proposal is simple and exacerbated by the current operational environment. It is simple in that units are clearly using a metric that measures a process, not a capability, to assess and report their personnel capabilities at the highest levels. Rejecting this proposal means the Army will continue to make strategic internal decisions and recommendations to the Joint Staff and civilian leaders based on irrelevant information.

One has only to review the 2012 Army Posture Statement to see how the cost of not adopting this proposal is exacerbated by the current operational environment: The "global fiscal environment is driving defense budgets down for our partners and allies, as well as our Nation." The Army has more than 190,000 Soldiers committed in nearly 150 countries. Our military is drawing down from 570,000 to 490,000 personnel. The days of excesses are gone. The Army has to measure its capabilities correctly in order to shape the future force.

Secretary of the Army John McHugh and Gen. Raymond T. Odierno made the following statement to the Senate and House of Representatives:

"As we look to the future, the uncertainty and complexity of the global security environment demands vigilance. In these changing economic times, America's Army will join Department of Defense efforts to maximize efficiency by identifying and eliminating redundant, obsolete and or unnecessary programs, responsibly reducing end-strength and by evolving our global posture to meet future security challenges."

As noted in the Army's 2012 Posture Statement, in order to meet our nation's future security challenges in this difficult fiscal environment, the Army must challenge all of its current paradigms to ensure it is maximizing its resources in its task of sustaining "the Nation's Force of Decisive Action" and providing combatant commanders "with the capabilities, capacity and diversity needed to be successful across a wide range of operations."

Several areas beyond the scope of this article need to be reviewed to ensure that we are properly measuring and reporting personnel readiness to strategic leaders. Is the Army using the correct method to measure available senior-grade composite level? The Army's method is not prescribed by law or joint policy.

Why is the Army USR process reactive instead of predictive? The Army currently looks in the rearview mirror each month, preventing opportunities at the strategic level to shape the future. Why can a commander manually reslot Soldiers in NetUSR without it being tied to eMILPO, effectively presenting one capability measurement to the Army chief of staff (NetUSR) and a different measurement to HRC (eMILPO)? There can only be a single data point if the Army wants to maximize its limited resources.

Changing the available DMOSQ metric to an assigned and authorized metric in order to properly measure the Army's P-level will not solve all the challenges the Army faces in the days to come, but it is a step in the right direction. Every Soldier counts; every Soldier is a capability.

___________________________________________________________________________________________

Col. Jack Usrey is an Army War College fellow at the University of Texas at Austin. He holds master's degrees in organizational management and in national security and strategic studies. He is a graduate of the Jumpmaster Course, the Joint Combined Warfighter School, and the Naval Command and Staff College.

___________________________________________________________________________________________

This article was published in the January-February 2014 issue of Army Sustainment magazine.
